IBM defines AI Ethics as a set of guidelines that advise on the design and outcomes of artificial intelligence.

Human ethics are guided by social mores, laws, and internal triggers like guilt that work in unison to align people with commonly held values. There is a growing consensus that AI systems should be bound by their own ethical guidelines.

However, as artificial intelligence (AI) comes closer to matching human capabilities, the concern is that AI is outpacing humans’ ability to control it with an ethical framework.

Why are AI Ethics important to implement?

AI’s vast capabilities can have harmful consequences if no principles govern the creation process.

While AI has remarkable potential to increase accessibility for people with physical and mental impairments, unchecked AI can also cause harm. AI-based software designed without ethical consideration has already wreaked havoc on different groups of people. For example, research by MIT and IBM found that AI-based loan approval software discriminates against applicants in disadvantaged economic brackets with historically restricted credit access.

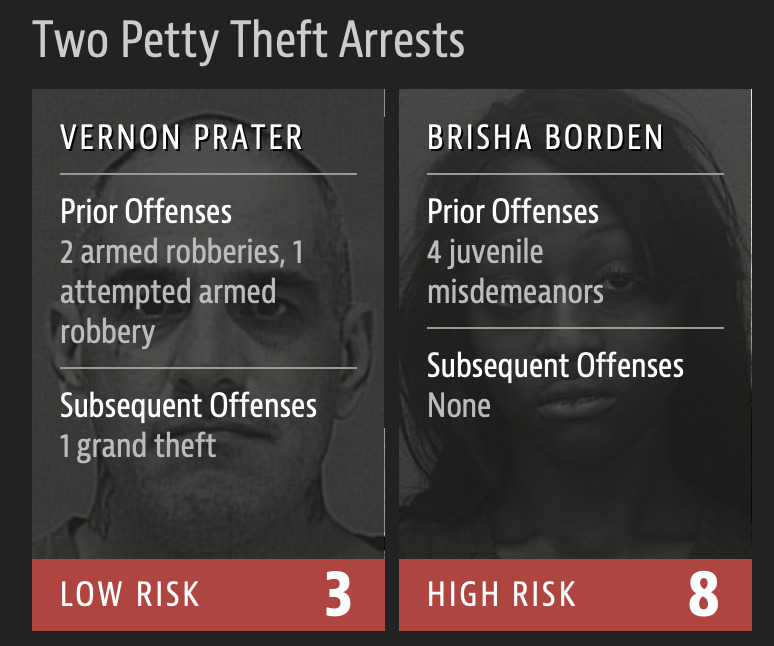
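One simple way to surface the kind of loan-approval disparity described above is to compare approval rates between groups. The sketch below is illustrative only: the group labels, the sample decisions, and the function names are hypothetical, and it does not reproduce the MIT and IBM research. A ratio well below 1.0 suggests the first group is approved far less often than the second; a ratio under 0.8 is a commonly cited warning threshold borrowed from US employment guidance, not a rule taken from this article.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def approval_rate_ratio(decisions, group, reference_group):
    """Divide one group's approval rate by the reference group's rate."""
    rates = approval_rates(decisions)
    if rates[reference_group] == 0:
        raise ValueError("reference group has no approvals to compare against")
    return rates[group] / rates[reference_group]


# Made-up decisions for illustration only (hypothetical group names).
sample_decisions = (
    [("low_income", True)] * 30
    + [("low_income", False)] * 70
    + [("high_income", True)] * 60
    + [("high_income", False)] * 40
)

ratio = approval_rate_ratio(sample_decisions, "low_income", "high_income")
print(f"Approval-rate ratio: {ratio:.2f}")
```

A check like this only flags a gap in outcomes. It does not explain why the gap exists, so it works best as a trigger for closer human review.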

Another example of the consequences of using ethically unregulated AI shows up in our courtrooms. A ProPublica study revealed racial bias in the AI models judges use to predict recidivism. Black defendants were… 77% more likely to be pegged as at higher risk of committing a future violent crime and 45% more likely to be predicted to commit a future crime of any kind.
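Bias in a risk-prediction model can also show up as unequal error rates. The sketch below compares the false positive rate between two groups, meaning the share of people who did not reoffend but were still flagged as high risk. The records, field names, and group labels are invented for illustration; this is not the study's data or methodology.

```python
def false_positive_rate(records, group):
    """Share of non-reoffenders in `group` who were flagged as high risk."""
    negatives = [
        r for r in records if r["group"] == group and not r["reoffended"]
    ]
    if not negatives:
        raise ValueError(f"no non-reoffending records for group {group!r}")
    flagged = sum(1 for r in negatives if r["predicted_high_risk"])
    return flagged / len(negatives)


def make_records(group, flagged, not_flagged):
    """Build hypothetical records for people who did not reoffend."""
    return [
        {"group": group, "reoffended": False, "predicted_high_risk": True}
        for _ in range(flagged)
    ] + [
        {"group": group, "reoffended": False, "predicted_high_risk": False}
        for _ in range(not_flagged)
    ]


# Made-up records: 4 of 10 flagged in group A, 2 of 10 flagged in group B.
records = make_records("A", 4, 6) + make_records("B", 2, 8)

fpr_a = false_positive_rate(records, "A")
fpr_b = false_positive_rate(records, "B")
print(f"False positive rate, group A: {fpr_a:.2f}")
print(f"False positive rate, group B: {fpr_b:.2f}")
```

In this invented sample, group A is wrongly flagged twice as often as group B, even though nobody in either group reoffended.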

Algorithms created without ethical consideration have also contributed to the political divide in America. In a study from Berkeley, researchers found that YouTube’s recommendation algorithm steers viewers toward more of the same kind of content than they would otherwise watch and is less likely to present alternative views. This can compound beliefs and further entrench individuals on their sides.

Can the person behind this algorithm be held responsible for increasing our nation’s polarization? If AI is created recklessly, amplifies political or racial biases, and contributes to radicalization – who is accountable?

How can you enforce AI Ethics?

AI Ethics introduces the dual concept of a creator’s accountability vs. a machine or AI’s accountability. When humans are held ethically responsible, AI and machines are the objects. When machines & AI are responsible, they are the subjects.

How do we draw the line between who owns the ethical responsibility for the behavior? For example, when a self-driving car gets into an accident, can the car be ticketed for following too closely, or is the person at fault?

Regardless of the complicated logistics determining who or what receives the consequence, countries that have chosen to stand up a code of AI ethics may or may not choose to enforce it. In cases where AI Ethics are viewed as more of a “soft” law, the reason is often fear of stifling innovation.

To enforce AI Ethics, you must first define what those ethics are.

Let’s consider some of the better-known ethical guidelines used for AI and what passing these rules looks like at a national and continental level.

Who gets to decide what AI Ethics are?

Much like regular ethics, AI ethics are not universal. Many organizations have attempted to create AI ethical guidelines that determine the line between helpful behavior and manipulation of free will. These are a few of the more influential and recognized codes of conduct organizations subscribe to when creating their own codes.

The Three Laws of Robotics

This is one of the first ethical AI codes created. Isaac Asimov introduced the three laws in 1942 in his short story “Runaround.” They are:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

The Belmont Report: Ethical Principles and Guidelines for the Protection of Human Subjects of Research

In 1979, the National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research released its ethical guidelines for research. Although lab-focused at the time, the report has since been broadly applied to experiments and research on humans through AI technology.

Its three main principles are:

- Respect for individuals, including informed consent around the risks and benefits of an experiment and the ability to opt out at any time

- Do no harm

- Justice – essentially equal distribution of the burdens and benefits from the experiment (some people

IBM’s multidisciplinary, multidimensional approach to trustworthy AI

IBM also has three main principles, with some overlap in values.

- The purpose of AI is to augment human intelligence and benefit humanity

- Your data is yours, and data policies should be transparent

- Technology should be explainable, and users should have access to what goes into algorithms and AI training

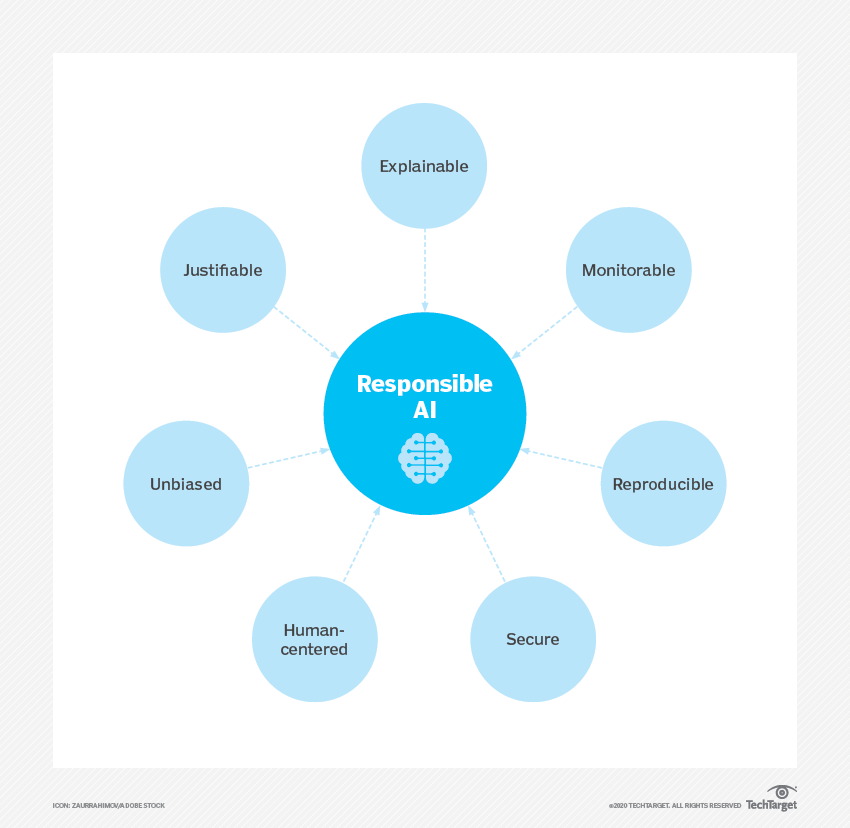

TechTarget’s Principles of Responsible AI

TechTarget defines AI Ethics as containing the following five components:

- Shared code repositories

- Approved model architectures

- Sanctioned variables

- Established bias testing methodologies to help determine the validity of tests for AI systems

- Stability standards for active machine learning models to make sure AI programming works as intended

Image Source: TechTarget

AI Ethics in the real world

We can talk about AI Ethics in theory, but how do we move from the philosophical to the practical? While world leaders have not agreed upon a set of ethical AI standards to roll out globally, there are successful examples of specific countries setting and enforcing these standards. These ethics are most successfully implemented when channeled through legal systems with fines and consequences.

Although not AI-specific, the General Data Protection Regulation (GDPR), passed by the EU in 2016, laid the groundwork for a model of how to enforce ethics around intangible tools that impact human behavior. GDPR gave individuals control and rights over their own personal data. Before this, individuals had little power over their data being sold or their activity being tracked with cookies, and little knowledge of the algorithms they were subjected to.

This decision marked a paradigm shift, with other jurisdictions adopting similar laws, including Turkey, Mauritius, Chile, Japan, Brazil, South Korea, South Africa, Argentina, Kenya, the UK (since Brexit), and the US state of California.

These regulations have required businesses to consider the ethics around using and storing individuals’ personally identifiable information (PII) and the importance of informed consent.

In 2021, the EU also led the way by proposing AI-specific legislation, the EU Artificial Intelligence Act. This is the first act of its kind to set standards for AI-driven products and rules for their development, trade, and use.

Hopefully, several other countries will follow in short order, but at the very least they will have to conform to these standards to sell their AI-based products anywhere in the EU.

While the ethics regarding AI may evolve along with the technology, AIOps today is still (thankfully) primarily human-driven. Once we cross into the superintelligent level where AI and machines can be considered autonomous ethical subjects responsible for their actions, AI Ethics will expand to include countless other considerations and implications.

AI Ethics in Network Monitoring

AIOps has an ethical application and use case in the network performance monitoring (NPM) problem space. While many workforces are rightfully concerned about being replaced by AI advancements, AI in the network world signals improvement, not displacement. AI in IT monitoring environments can streamline complex networks, automate specific tasks, and perform threat detection without human involvement. AI can be applied to operations management to simplify IT’s role in oversight and get to the root cause faster.

While more performance monitoring tools have started incorporating AI and ML, there seems to be a learning curve with their application. In a recent EMA survey, 59% of enterprises did not feel like they were effectively evaluating the AIOps solutions they applied to their network management.

Layering AI over performance monitoring can provide intelligent environment analysis and response and help engineers look ahead with predictive analytics. AI and ML can examine historical data and provide insights into future network behaviors. With increasingly unwieldy and complex networks, AI can deliver a new level of visibility that looks ahead and frees developers from arduous management tasks that can be automated.
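To make the idea of learning from historical data concrete, here is a minimal sketch that flags unusual latency readings by comparing each new sample against a trailing window of recent ones. It uses a plain rolling z-score as a simple statistical stand-in rather than a trained ML model, and it is not how any particular monitoring product works. The function name, window size, threshold, and latency values are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def find_anomalies(samples, window=5, threshold=3.0):
    """Return indices of samples that deviate sharply from recent history.

    Each sample is compared with the mean and standard deviation of the
    `window` samples immediately before it.
    """
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            continue  # flat history: no spread to measure deviation against
        if abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical round-trip latency readings in milliseconds.
latency_ms = [20, 21, 19, 20, 22, 21, 20, 95, 21, 20]
print(find_anomalies(latency_ms))  # → [7]
```

The spike at index 7 is flagged because it sits far outside the spread of the five readings before it. The readings after the spike are not flagged, since the spike itself widens the window's spread for a few samples, which is one known limitation of this simple approach.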

About LiveAction

Want to continue the discussion? Request a demo of LiveAction’s AIOps appliance, LiveNA. We apply machine learning and heuristics to network datasets to deliver advanced anomaly detection, predictive analytics, and deeper network understanding.