What is Network Monitoring

Network monitoring is the practice of using a tool, or a combination of tools, to track data moving across a network. Monitoring tools usually rely on packet capture or flow analysis for their data, which is then analyzed to provide visibility into operations such as:

- Traffic

- Bandwidth utilization

- Uptime
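As a rough illustration of one of these metrics, bandwidth utilization can be estimated from two readings of an interface byte counter. This is a minimal sketch: the function name and sample numbers are hypothetical, and it ignores counter wrap-around, which a real collector would have to handle.

```python
def utilization_pct(bytes_start, bytes_end, interval_s, link_speed_bps):
    """Percent of link capacity used between two byte-counter readings.

    Assumes the counter did not wrap or reset during the interval.
    """
    bits_sent = (bytes_end - bytes_start) * 8
    capacity_bits = interval_s * link_speed_bps
    return 100.0 * bits_sent / capacity_bits


# Hypothetical readings: 375 MB transferred in 60 s on a 100 Mbps link
usage = utilization_pct(0, 375_000_000, 60, 100_000_000)
print(f"Utilization: {usage:.1f}%")
```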

Network monitoring allows organizations to set a baseline for typical network performance and receive alerts when traffic deviates from it. Several behaviors could trigger alarms: a network component that stops receiving traffic, erratic traffic patterns, packet loss, and so on.
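One simple way to picture a baseline is as the mean and standard deviation of past readings, with an alert whenever a new reading falls too far from the mean. The sketch below assumes that approach. The function names, the three-sigma cutoff, and the sample values are illustrative choices, not how any particular monitoring product works.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Return (mean, standard deviation) of historical throughput samples."""
    return mean(samples), stdev(samples)


def deviates(value, baseline, max_sigma=3.0):
    """True when value lies more than max_sigma standard deviations from the mean."""
    avg, sd = baseline
    if sd == 0:
        return value != avg
    return abs(value - avg) / sd > max_sigma


# Hypothetical throughput readings in Mbps
history = [98, 102, 100, 101, 99, 100, 103, 97]
baseline = build_baseline(history)

for reading in (101, 0, 250):
    status = "ALERT" if deviates(reading, baseline) else "ok"
    print(f"{reading} Mbps: {status}")
```

A reading of 0 Mbps here stands in for a component that has stopped receiving traffic, and 250 Mbps for an erratic spike.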

What is Network Observability

Network observability is what happens with the data that monitoring tools deliver. It’s often called the second step of network monitoring, or what monitoring evolves into. Observability further analyzes the data identified and delivered through monitoring. This next step answers the question, “We see the problem. Now what?”

Observability takes on:

- Identifying root causes of network issues

- Discovering historical trends or cycles that indicate this type of network event

- Developing intelligent and complex alerting that can consider multiple factors pointing to – and predicting – event recurrence, SLA breaches, and network component failures
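To make "alerting that can consider multiple factors" more concrete, here is a minimal sketch of a rule that escalates only when several indicators agree. The `LinkStats` fields, the limits, and the "watch"/"alert" labels are all hypothetical. Real observability platforms use far richer models than this.

```python
from dataclasses import dataclass


@dataclass
class LinkStats:
    packet_loss_pct: float
    latency_ms: float
    utilization_pct: float


def risk_factors(stats, loss_limit=1.0, latency_limit=150.0, util_limit=90.0):
    """List the indicators that currently exceed their (assumed) limits."""
    factors = []
    if stats.packet_loss_pct > loss_limit:
        factors.append("packet loss")
    if stats.latency_ms > latency_limit:
        factors.append("latency")
    if stats.utilization_pct > util_limit:
        factors.append("utilization")
    return factors


def classify(stats, min_factors=2):
    """Escalate to 'alert' only when at least min_factors indicators agree."""
    factors = risk_factors(stats)
    if len(factors) >= min_factors:
        return "alert", factors
    if factors:
        return "watch", factors
    return "ok", factors


print(classify(LinkStats(packet_loss_pct=2.5, latency_ms=200.0, utilization_pct=95.0)))
```

Requiring agreement between indicators is one way to keep a single noisy metric from paging a team on its own.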

Monitoring First … Observability Second

Monitoring provides the output data that observability draws inferences from. You may think, “Observability is just another buzzword that I now have to contend with.”

It isn’t so. These words do not mean the same thing. Let’s step out of the network to see how monitoring and observability play out in a different scenario.

Scenario 1

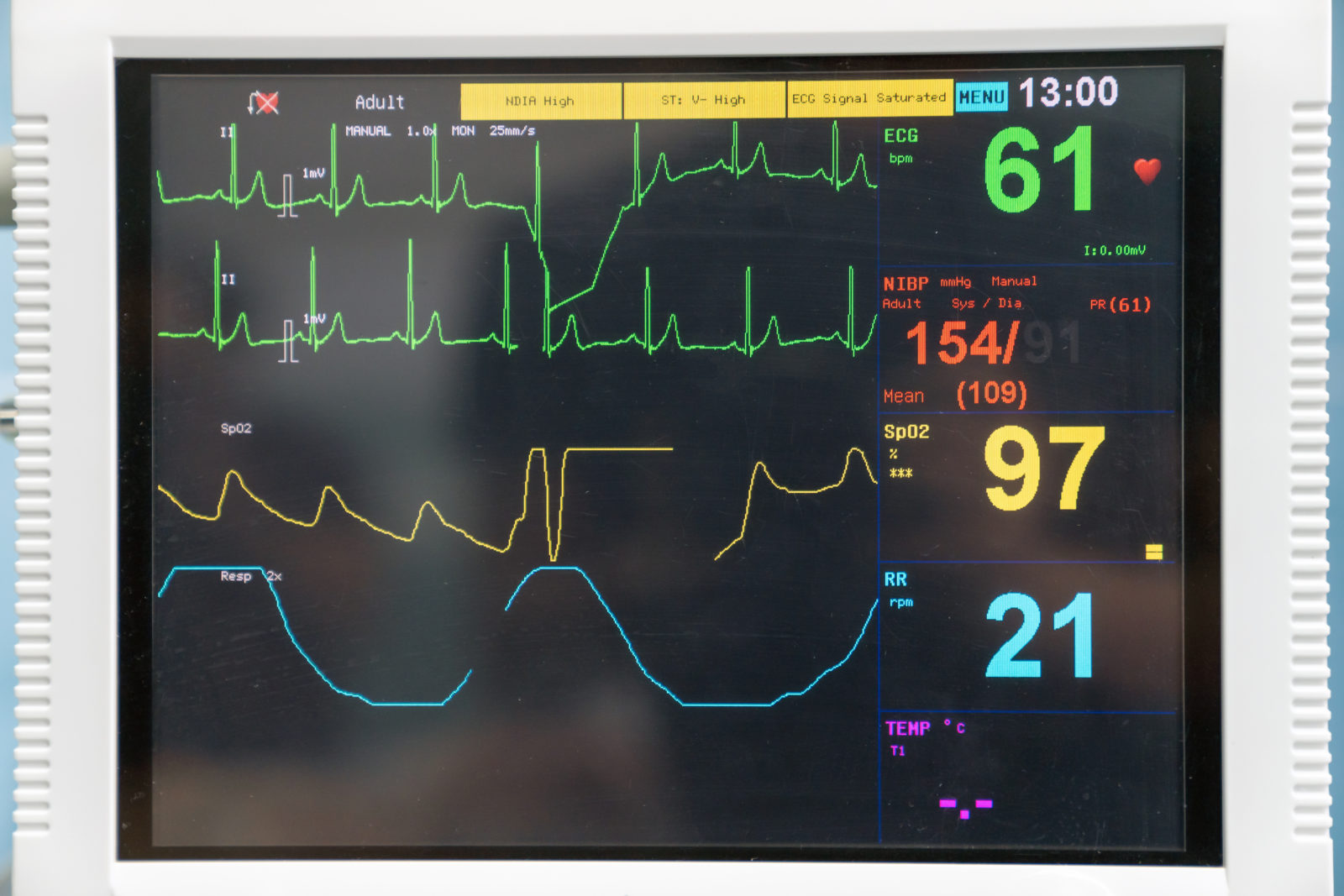

When someone experiences heart pain or a racing heart, they may go to the hospital. An EKG can look for irregular patterns, track beats per minute, and alert when readings cross specific thresholds.

This machine relays what is happening in real time and has alarms that alert the doctor when something goes wrong.

Here’s where observation comes in. The doctor observes the data from this machine, along with other indicators like test results, patient appearance, and medical history, to diagnose the cause of the heartbeat aberrations. This diagnosis is also used to predict the patient outcome and the course of treatment.

It’s the same process when working with network and application health. You begin with monitoring and end in observation.

Gartner agrees with this explanation. In a 2020 publication, it defined data observability as an extension of data monitoring: the conclusion, or insights, phase. Monitoring consumes information passively, while observability actively makes sense of it.

Monitoring Watches. Observability Notices.

Network monitoring analyzes network trends to reveal latency, jitter, or congestion. It detects network events through threshold alerts and sees when things go wrong.
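As a small example of threshold alerting, the sketch below computes average latency and jitter from a list of round-trip times and flags whichever exceeds its limit. Jitter is taken here as the mean absolute difference between consecutive samples, which is one common simplification. The function names and limits are assumptions made for illustration.

```python
def average_latency(rtts_ms):
    """Mean round-trip time in milliseconds."""
    return sum(rtts_ms) / len(rtts_ms)


def jitter(rtts_ms):
    """Mean absolute difference between consecutive RTT samples."""
    if len(rtts_ms) < 2:
        return 0.0
    diffs = [abs(b - a) for a, b in zip(rtts_ms, rtts_ms[1:])]
    return sum(diffs) / len(diffs)


def threshold_alerts(rtts_ms, max_latency_ms=100.0, max_jitter_ms=30.0):
    """Return the names of the metrics that exceed their limits."""
    alerts = []
    if average_latency(rtts_ms) > max_latency_ms:
        alerts.append("latency")
    if jitter(rtts_ms) > max_jitter_ms:
        alerts.append("jitter")
    return alerts


# Hypothetical RTT samples in milliseconds, with one spike
samples = [20, 22, 21, 80, 25]
print(average_latency(samples), jitter(samples), threshold_alerts(samples, max_jitter_ms=10.0))
```

This tells you that jitter crossed a line, not why. Explaining the spike is the observability step described next.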

Network observability uses the system’s outputs to tell you what is causing the issue. It goes further, performing root-cause analysis: it takes raw data from packet capture and chews it over, resulting in a holistic understanding of the event.

With this level of comprehension, teams not only understand what preceded the network disruption but also identify the indicators that will predict its recurrence.

Scenario 2

Going through school, I remember that the correct answer on my math test was not enough. I had to show my work, or it was a big -2.

Monitoring and observability are like that too. You have arrived at what is wrong, but understanding the “work” that got you there shows NetOps teams exactly what changes they need to make to predict and avoid this network event in the future.

Where Observability Needs Quality Monitoring

With data volumes increasing yearly, monitoring and analyzing these data points can lead to countless “barking dog” alerts and vast volumes of false or benign flags. This noise can cloud a team’s ability to see what is happening and create low-observability conditions.

For an organization to properly use observability, monitoring must first be fine-tuned. Without accurate monitoring, observability is not possible. One begets the other. Monitoring tools incorporating AI and ML automation techniques can help reduce excess noise and create an ideal observability environment.
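AI and ML techniques are beyond a short example, but the basic idea of cutting alert noise can be shown with a much simpler, non-ML stand-in: suppressing repeats of the same alert inside a time window. The tuple layout, the function name, and the 300-second window are assumptions made for this sketch.

```python
def suppress_repeats(alerts, window_s=300):
    """Drop repeats of the same (device, kind) alert within window_s seconds.

    alerts: iterable of (timestamp_s, device, kind) tuples sorted by timestamp.
    """
    last_kept = {}
    kept = []
    for ts, device, kind in alerts:
        key = (device, kind)
        if key not in last_kept or ts - last_kept[key] >= window_s:
            kept.append((ts, device, kind))
            last_kept[key] = ts
    return kept


# Hypothetical alert stream: router r1 repeats the same alert a minute later
raw = [
    (0, "r1", "packet loss"),
    (60, "r1", "packet loss"),
    (120, "r2", "packet loss"),
    (400, "r1", "packet loss"),
]
for alert in suppress_repeats(raw):
    print(alert)
```

The repeat at 60 seconds is dropped, while the alert from a different device and the later recurrence at 400 seconds are kept.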

So, Where Does Telemetry Play Into All of This?

Telemetry is neither the monitoring nor the observations leading to insights. Telemetry is the ability to do these things. To say your network has broad telemetry means you can connect the visibility dots between different systems and applications and collect data throughout the network, regardless of its architecture.

Monitoring and Observability

LiveAction boasts the broadest telemetry available on the market. We offer both network monitoring, with reporting and alerting, and observability, through packet tracing with LiveWire and advanced analytic processing.

Our total solution pulls together flow, intelligent packet capture, SNMP, and API metrics into a unified live visual display. LiveAction uses artificial intelligence and metadata fingerprinting to create enhanced Liveflow, richer than IPFIX, which is then exported to our flow collector, LiveNX, for complete network topology and application visibility.

See differently today. Request an interactive 1:1 demo of our solution.